CREATING LIGHTING IN A 2D GAME

So far, D.O.R.F. uses 2D sprites as in most classic RTS games, with models created from 3D renders taken from different angles to give the illusion of 3-dimensional movement. This currently forces us to use baked-in lighting: all objects and their cast shadows are lit by sunlight from a fixed position, and the shadows themselves are just flat images with no actual depth data. While this is simple to set up, it does create issues. As can be seen in the above image, it can result in artifacting, such as shadows being cast flatly onto objects in a nonsensical way, or shadows not casting onto objects at all (see the shadow from the tree casting onto the trash can in the lower right of the image). There are also all sorts of depth-sorting issues, such as sprites rendering above or below objects incorrectly (for example, a unit entering a building but still appearing overlaid on the building rather than disappearing beneath its roof). This system isn’t ideal.

While you may think of 2D games as inherently possessing no lighting functionality, or at least only limited lighting functionality, this isn’t necessarily the case. Currently, we are working on a method to dynamically light objects and allow them to cast shadows realistically, while still effectively being 2-dimensional sprites. This is done via the magic of normal sprites and depth sprites. Here is how the process works.

Here we can see a sprite for the Powerplant. This is called the Diffuse sprite. It possesses no lighting information and is itself flatly lit, but it’s the most important sprite, since it’s the most obvious piece of visual information for the Powerplant structure ingame. Of course, to light it, we’ll need some additional sprites.

(Oh, and if you are wondering about the magenta-colored accents, those are what the game uses to determine team colors, though that’s a topic in and of itself.)

Here we have the Normal sprite. Any 3D artists or aficionados of how modern games work under the hood should recognize this concept, as it’s almost universally used in 3D games now, albeit as a texture applied to a 3D model. Essentially this uses color information to determine facings (so cyan = up, magenta = southeast, blue = southwest, etc). This is what will be used to give the 2D sprite 3-dimensionality, so that light hitting the surface will be applied to each surface correctly, rather than affecting the surface as though it were a flat texture.
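
To make the idea concrete, here is a minimal sketch of how a single pixel could be lit from a Diffuse texel and a Normal texel. It is not our engine code: it assumes the common `n = 2c - 1` color-to-direction encoding (the exact color mapping D.O.R.F. uses may differ) and simple Lambertian shading, and the names `decode_normal`, `shade`, and `ambient` are made up for the example.

```python
import math

def decode_normal(rgb):
    """Map an 8-bit RGB texel to a unit normal (assumed n = 2c - 1 encoding)."""
    x, y, z = (2.0 * c / 255.0 - 1.0 for c in rgb)
    length = math.sqrt(x * x + y * y + z * z) or 1.0
    return (x / length, y / length, z / length)

def shade(diffuse_rgb, normal_rgb, light_dir, ambient=0.2):
    """Lambertian shading of one pixel.

    light_dir points from the surface toward the light; ambient is a
    hypothetical floor so unlit faces are not pure black.
    """
    n = decode_normal(normal_rgb)
    length = math.sqrt(sum(c * c for c in light_dir)) or 1.0
    l = tuple(c / length for c in light_dir)
    # Surfaces facing away from the light get no direct contribution.
    n_dot_l = max(0.0, sum(a * b for a, b in zip(n, l)))
    intensity = min(1.0, ambient + (1.0 - ambient) * n_dot_l)
    return tuple(round(c * intensity) for c in diffuse_rgb)

# A texel facing the camera, lit head-on and then from behind.
print(shade((200, 100, 50), (128, 128, 255), (0, 0, 1)))
print(shade((200, 100, 50), (128, 128, 255), (0, 0, -1)))
```

The same Diffuse color comes out bright or dark depending only on which way the Normal texel says the surface is facing, which is what lets a flat sprite respond to a moving light.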

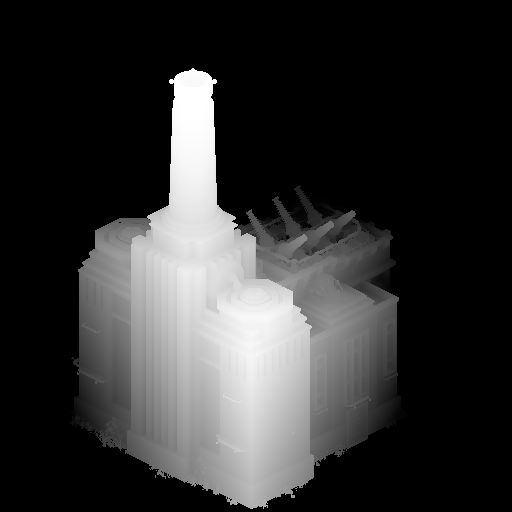

And here we have the Depth map (alternatively called Z-Depth). This uses grayscale values to determine distance from the camera (with lighter = closer and darker = farther), and while it also has some application with lighting and shadowing, it will primarily be used in depth-sorting, so that the game engine knows which sprites to render over or under which other sprites. This will ideally cut down on all the Z-fighting issues and problems with objects rendering on top of other objects incorrectly.
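
As a rough illustration of depth-sorting with a Depth map, the sketch below composites sprites with a per-pixel depth test instead of relying on draw order. The sprite layout (a dict with `pos`, `color`, and `depth` grids, with `None` marking transparent texels) and the function name `composite` are assumptions made for the example, not how the engine stores its data.

```python
def composite(sprites, width, height, background=(0, 0, 0)):
    """Draw sprites using a per-pixel depth test (lighter depth = closer)."""
    frame = [[background] * width for _ in range(height)]
    nearest = [[-1] * width for _ in range(height)]  # depth buffer, -1 = empty
    for sprite in sprites:
        ox, oy = sprite["pos"]
        for y, row in enumerate(sprite["depth"]):
            for x, depth in enumerate(row):
                px, py = ox + x, oy + y
                if depth is None or not (0 <= px < width and 0 <= py < height):
                    continue  # transparent texel or off-screen
                if depth > nearest[py][px]:  # closer than what is already there
                    nearest[py][px] = depth
                    frame[py][px] = sprite["color"][y][x]
    return frame

# A roof (closer to the camera) overlapping a unit that walked underneath it.
roof = {"pos": (0, 0), "color": [["roof", "roof"]], "depth": [[200, 200]]}
unit = {"pos": (1, 0), "color": [["unit", "unit"]], "depth": [[100, 100]]}
print(composite([unit, roof], 3, 1, background="bg"))
print(composite([roof, unit], 3, 1, background="bg"))
```

Both calls give the same picture, with the roof covering the unit where they overlap, regardless of which sprite was submitted first.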

Lastly, we have the most experimental aspect of this system, called the Micro Z-depth sprite, or alternatively the Shadow Sprite. Using a series of Z-depth frames rendered from specific angles, the game will take this Z-depth information and use it to create a 3D mesh on the fly. This mesh will be invisible ingame, but will function as a means from which 2D sprites will cast functional, dynamic shadows onto objects from light sources.
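
How several Z-depth frames get fused into one mesh is still being worked out, so the following is only a simplified sketch of the most basic step: turning a single depth frame into a heightfield mesh, with one vertex per texel and two triangles per 2x2 cell. The function name `depth_to_mesh` and the `z_scale` parameter are hypothetical, and combining the frames from different angles is deliberately left out.

```python
def depth_to_mesh(depth, z_scale=1.0):
    """Build a heightfield mesh from one grid of 0-255 depth values.

    Returns (vertices, triangles): vertices are (x, y, z) tuples and
    triangles are index triples into the vertex list.
    """
    rows, cols = len(depth), len(depth[0])
    vertices = [
        (x, y, depth[y][x] / 255.0 * z_scale)
        for y in range(rows)
        for x in range(cols)
    ]
    triangles = []
    for y in range(rows - 1):
        for x in range(cols - 1):
            i = y * cols + x  # top-left corner of this cell
            triangles.append((i, i + 1, i + cols))
            triangles.append((i + 1, i + cols + 1, i + cols))
    return vertices, triangles

vertices, triangles = depth_to_mesh([[0, 255], [255, 0]])
print(len(vertices), len(triangles))
```

A frame of W x H texels yields W x H vertices and 2 x (W - 1) x (H - 1) triangles, which is one reason smaller Micro Z-depth frames are attractive.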

Note that there are some unanswered questions with this specific method of casting shadows. Chiefly, this system is largely experimental, and it’s not totally clear yet how this will actually function ingame; there may be cases where the shadow mesh generated does not quite match the intended shape of the object, and there may be cases where the shadow mesh incorrectly envelops the object it is supposed to be casting from. There are also potential issues with animated objects, such as infantry units and mechs, or a gate’s closing and opening animation, where each frame of animation requires an accompanying shadow mesh.

While this sounds like an astronomical number of frames, this isn’t necessarily true; the Micro Z-depth frames can be significantly smaller than whatever they are supposed to be cast from, and with regard to unit rotations, a single animation frame only needs the 8 angles of view for the Micro Z-depth frames to work. So for instance, a mech with 32 facings and 16 frames per walk cycle would only require 128 Micro Z-depth frames (16 animation frames × 8 angles), rather than 4096 (32 facings × 16 frames × 8 angles). It only needs to capture enough data to create the mesh for each frame of the animation cycle, and since the result is a 3D mesh, it can simply be rotated to account for each of the 32 facings. It’s for this reason that a tank would only need 8 Micro Z-depth frames to render a shadow mesh from; even if the tank has 32 or 64 frames of rotation, the shadow mesh can simply be rotated to fit all these angles. Of course, if a tank has a turret, that will also need its own series of Micro Z-depth frames.
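
The frame counts above can be checked with a few lines of arithmetic. This sketch assumes the 4096 figure comes from rendering 8 Micro Z-depth angles for every one of the 32 × 16 sprite frames; the function names are hypothetical.

```python
def micro_z_frames(animation_frames, angles=8):
    """Frames needed when the shadow mesh is rotated to match each facing."""
    return animation_frames * angles

def naive_frames(facings, animation_frames, angles=8):
    """Frames needed if every facing had its own set of depth angles."""
    return facings * animation_frames * angles

print(micro_z_frames(16))    # mech walk cycle: 16 frames x 8 angles
print(naive_frames(32, 16))  # same mech without mesh rotation
print(micro_z_frames(1))     # tank hull with no animation
```

Note that the number of facings drops out of the first formula entirely, so adding more rotation frames to a unit does not add any Micro Z-depth frames.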

As you may expect, this also allows more lighting options for D.O.R.F. Some maps could have their sunlight position shifted to different angles, allowing for the creation of more believable and atmospheric maps at odd times of day. It also allows for objects to create light dynamically, such as the headlights of vehicles, or lights cast from certain structures. Another major advantage of this system over just having a fully 3D game is that this method of lighting/shading is extremely efficient for creating lots of lights at once. While many 3D games struggle with even a few light sources at once, this method of lighting/shading means maps could potentially have dozens (possibly hundreds) of light sources.

As this system is still in development, it will likely be a month or two before we will have publicly demonstrable results, and it will be an enormous amount of rework to re-render every single asset in the game so far to account for this new system.